Up to now, the ‘Protect Your Microsoft 365 Data in the Age of AI’ series consists of the following posts:

- Protect your Microsoft 365 data in the age of AI: Introduction

- Protect your Microsoft 365 data in the age of AI: Prerequisites

- Protect your Microsoft 365 data in the age of AI: Gaining Insight

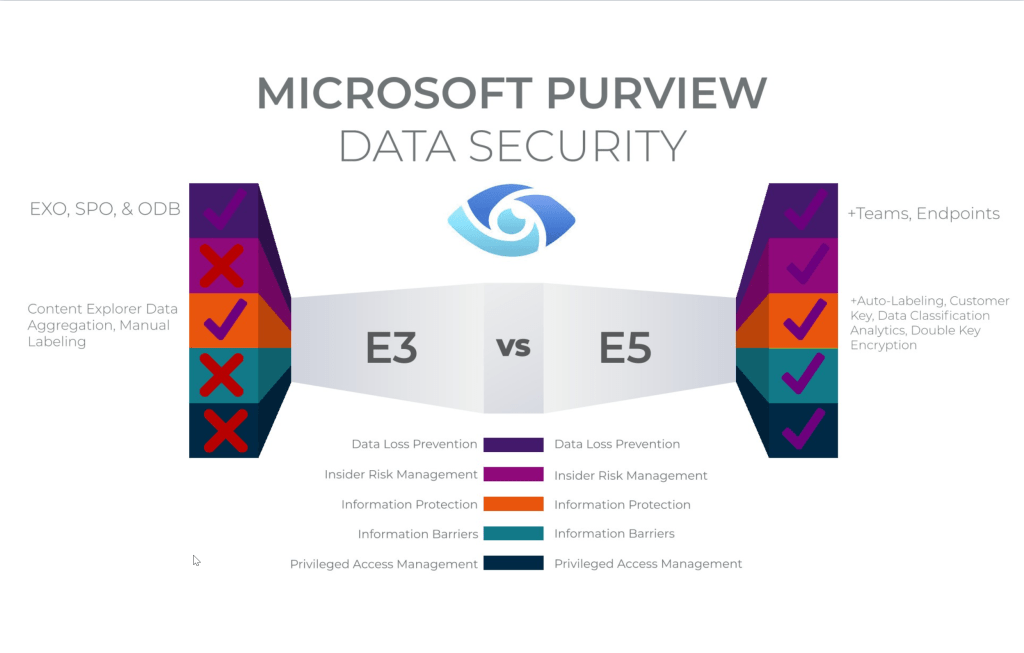

- Protect your Microsoft 365 data in the age of AI: Licensing

- Protect your Microsoft 365 data in the age of AI: Prohibit labeled info to be used by M365 Copilot

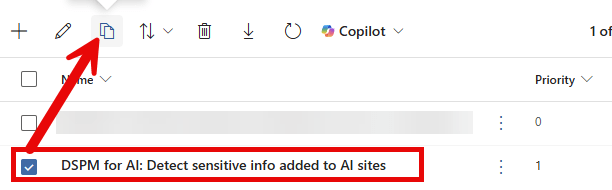

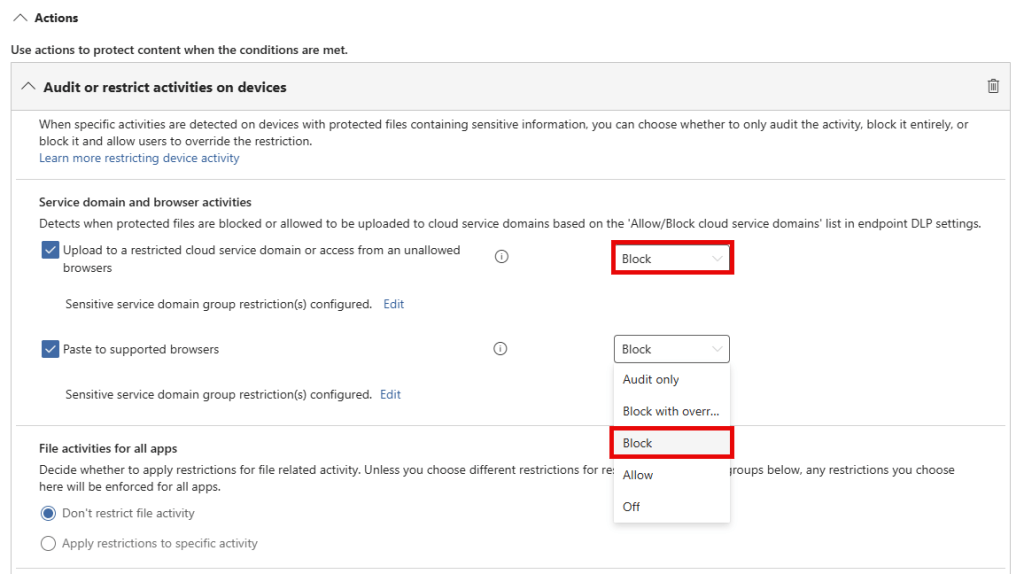

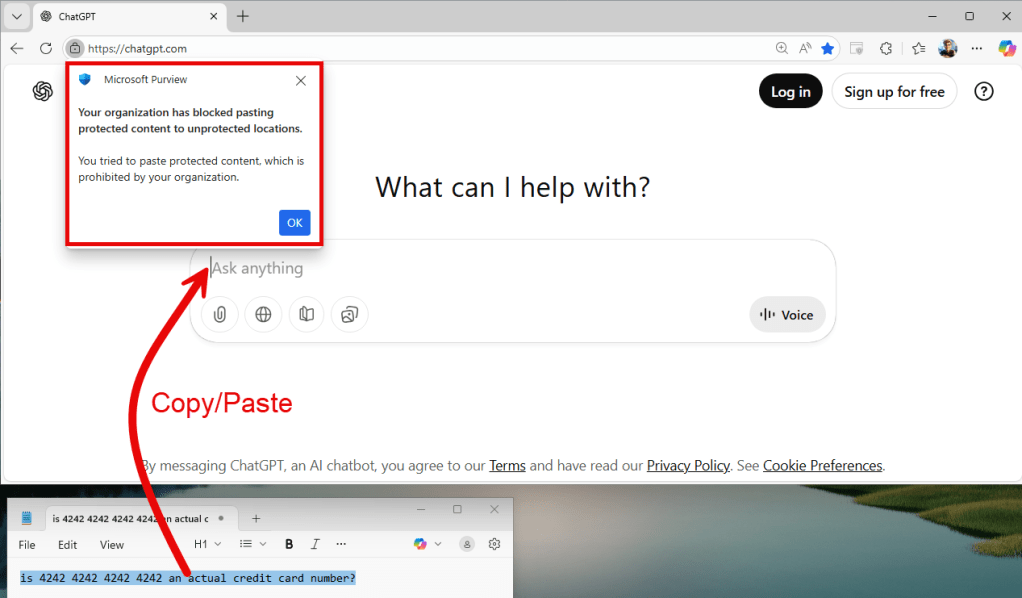

- Protect Your Microsoft 365 data in the age of AI: Prevent sensitive data from being shared with 3rd party AI

- Protect Your Microsoft 365 data in the age of AI: Block Access to Generative AI sites

- Protect Your Microsoft 365 data in the age of AI: Wrap-up – The complete picture (This post)

Generative AI is no longer experimental. It has become embedded in our daily way of working within Microsoft 365. Tools such as Copilot promise productivity gains, but at the same time they push organizations to finally address something they have been postponing for a long time: data security.

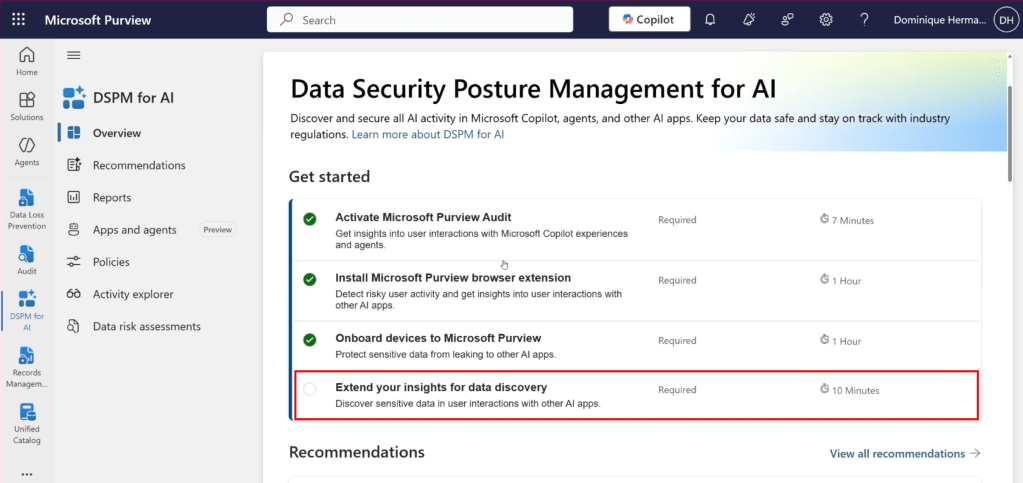

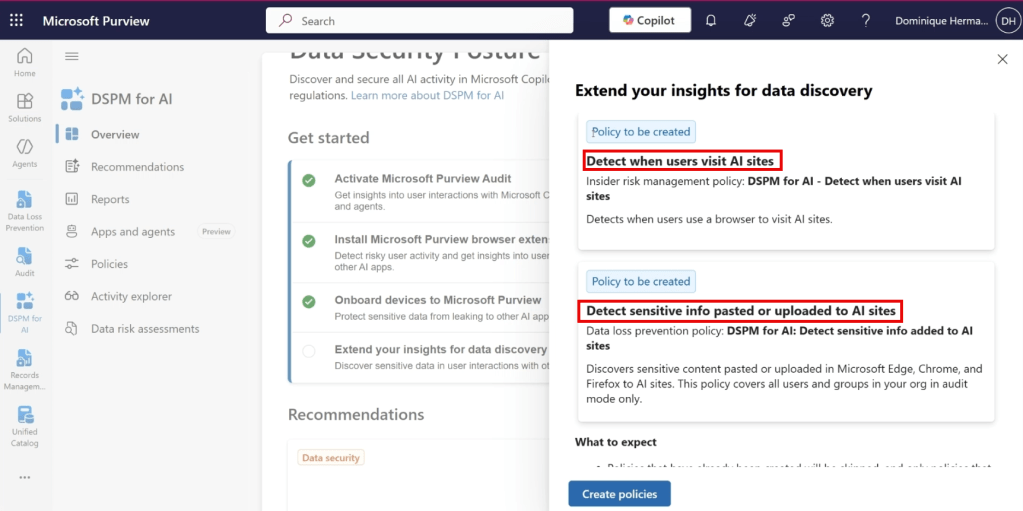

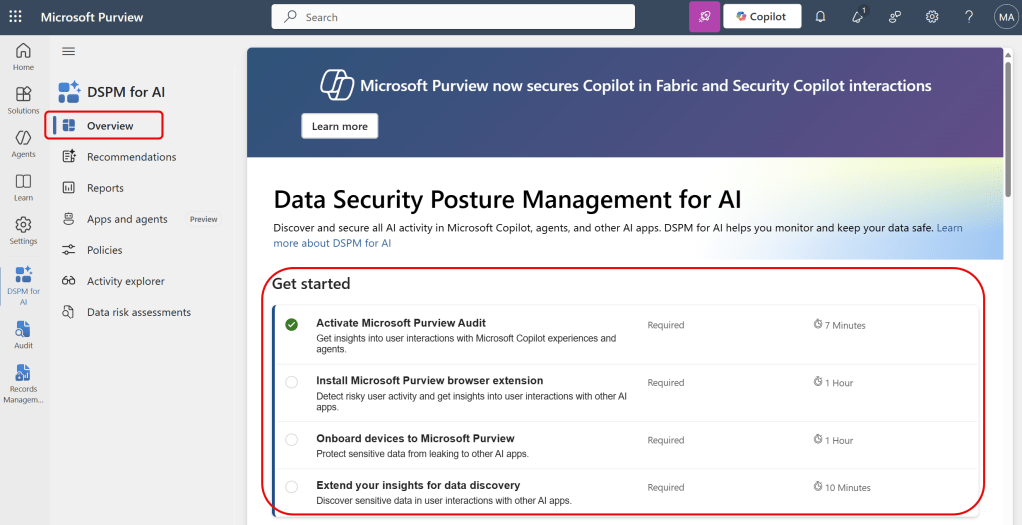

In this blog series, I have shown step by step how you can take control of protecting your Microsoft 365 data in the age of AI using Microsoft 365, Purview, and Microsoft Defender for Cloud Apps (MDA). In this concluding post, I bring everything together and outline the complete picture.

Generative AI does not change your data, but it does change the risk.

AI does not introduce new types of data. What does change, however, is the scale and speed at which existing data is used, combined, and reused. Where sensitive information was previously stored in a relatively passive way in emails, documents, and (somewhat less passively) in Teams chats, AI can now rapidly analyse, combine, regenerate, and present it (visually or in text) to users who may not even have known that this information existed, simply because they never searched for it.
